Zookeeper Deployment

Network: Headless Service

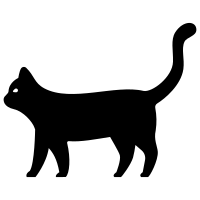

Create the headless service:

```shell
vim zookeeper-default-headless.yml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: zookeeper
    app.kubernetes.io/instance: zookeeper-default
    app.kubernetes.io/managed-by: Tiller
    app.kubernetes.io/name: zookeeper
    helm.sh/chart: zookeeper-5.1.1
  name: zookeeper-default-headless
  namespace: public
spec:
  clusterIP: None
  ports:
    - name: client
      port: 2181
      protocol: TCP
      targetPort: client
    - name: follower
      port: 2888
      protocol: TCP
      targetPort: follower
    - name: election
      port: 3888
      protocol: TCP
      targetPort: election
  publishNotReadyAddresses: true
  selector:
    app.kubernetes.io/component: zookeeper
    app.kubernetes.io/instance: zookeeper-default
    app.kubernetes.io/name: zookeeper
```

```shell
kubectl create -f zookeeper-default-headless.yml
kubectl -n public get svc
```

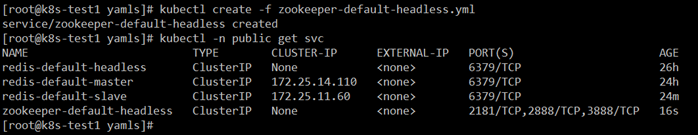
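Because clusterIP: None makes this a headless Service, and the StatefulSet that follows points its serviceName at it, each ZooKeeper pod gets a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`; the ZOO_SERVERS entry in the StatefulSet is exactly such a name. A minimal sketch of how the name is composed, assuming the default cluster domain `cluster.local`; `pod_fqdn` is a hypothetical helper, not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the DNS name a headless Service gives to one
# StatefulSet pod. The cluster domain defaults to cluster.local (assumption);
# pass a fifth argument if your cluster uses a different one.
pod_fqdn() {
  local sts="$1"        # StatefulSet name, e.g. zookeeper-default
  local ordinal="$2"    # pod ordinal, starting at 0
  local svc="$3"        # headless Service name (the StatefulSet's serviceName)
  local ns="$4"         # namespace
  local domain="${5:-cluster.local}"
  printf '%s-%s.%s.%s.svc.%s\n' "$sts" "$ordinal" "$svc" "$ns" "$domain"
}

# pod_fqdn zookeeper-default 0 zookeeper-default-headless public
# prints zookeeper-default-0.zookeeper-default-headless.public.svc.cluster.local
```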

Create the StatefulSet

The YAML file used for the deployment:

```shell
vim zookeeper-default.yml
```

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app.kubernetes.io/component: zookeeper
    app.kubernetes.io/instance: zookeeper-default
    app.kubernetes.io/managed-by: Tiller
    app.kubernetes.io/name: zookeeper
    helm.sh/chart: zookeeper-5.1.1
  name: zookeeper-default
  namespace: public
spec:
  podManagementPolicy: Parallel
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: zookeeper
      app.kubernetes.io/instance: zookeeper-default
      app.kubernetes.io/name: zookeeper
  serviceName: zookeeper-default-headless
  template:
    metadata:
      labels:
        app.kubernetes.io/component: zookeeper
        app.kubernetes.io/instance: zookeeper-default
        app.kubernetes.io/name: zookeeper
      name: zookeeper-default
    spec:
      containers:
        - command:
            - bash
            - '-ec'
            # Literal block (|) so bash receives one command per line
            - |
              # Execute entrypoint as usual after obtaining ZOO_SERVER_ID based on POD hostname
              HOSTNAME=`hostname -s`
              if [[ $HOSTNAME =~ (.*)-([0-9]+)$ ]]; then
                ORD=${BASH_REMATCH[2]}
                export ZOO_SERVER_ID=$((ORD+1))
              else
                echo "Failed to get index from hostname $HOSTNAME"
                exit 1
              fi
              exec /entrypoint.sh /run.sh
          env:
            - name: ZOO_PORT_NUMBER
              value: '2181'
            - name: ZOO_TICK_TIME
              value: '2000'
            - name: ZOO_INIT_LIMIT
              value: '10'
            - name: ZOO_SYNC_LIMIT
              value: '5'
            - name: ZOO_MAX_CLIENT_CNXNS
              value: '60'
            - name: ZOO_4LW_COMMANDS_WHITELIST
              value: 'srvr, mntr'
            - name: ZOO_SERVERS
              value: >-
                zookeeper-default-0.zookeeper-default-headless.public.svc.cluster.local:2888:3888
            - name: ZOO_ENABLE_AUTH
              value: 'no'
            - name: ZOO_HEAP_SIZE
              value: '1024'
            - name: ZOO_LOG_LEVEL
              value: ERROR
            - name: ALLOW_ANONYMOUS_LOGIN
              value: 'yes'
          image: 'docker.io/bitnami/zookeeper:3.5.6-debian-9-r20'
          imagePullPolicy: IfNotPresent
          livenessProbe:
            failureThreshold: 3
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            tcpSocket:
              port: client
            timeoutSeconds: 5
          name: zookeeper
          ports:
            - containerPort: 2181
              name: client
              protocol: TCP
            - containerPort: 2888
              name: follower
              protocol: TCP
            - containerPort: 3888
              name: election
              protocol: TCP
          readinessProbe:
            failureThreshold: 6
            initialDelaySeconds: 5
            periodSeconds: 10
            successThreshold: 1
            tcpSocket:
              port: client
            timeoutSeconds: 5
          resources:
            requests:
              cpu: 100m
              memory: 256Mi
          securityContext:
            runAsUser: 1001
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /bitnami/zookeeper
              name: data
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      securityContext:
        fsGroup: 1001
      terminationGracePeriodSeconds: 30
  updateStrategy:
    type: RollingUpdate
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 8Gi
        storageClassName: alicloud-nas
```
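The container command derives ZOO_SERVER_ID from the pod hostname: StatefulSet pods are named `<statefulset>-<ordinal>`, so the trailing number is extracted and incremented by one, because ordinals start at 0 while ZooKeeper documents its server IDs (myid) as starting at 1. The same logic pulled out into a standalone function, so it can be tried outside the cluster; `zoo_server_id` is a hypothetical name, and the regex is the one used in the manifest:

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the StatefulSet startup logic:
# "<name>-<ordinal>" -> ordinal + 1. Needs bash for [[ =~ ]] and BASH_REMATCH.
zoo_server_id() {
  local host="$1"
  if [[ $host =~ (.*)-([0-9]+)$ ]]; then
    local ord=${BASH_REMATCH[2]}
    echo $((ord + 1))
  else
    # Same failure case as the manifest: no "-<number>" suffix
    echo "Failed to get index from hostname $host" >&2
    return 1
  fi
}

# Inside a pod: zoo_server_id "$(hostname -s)"
# zoo_server_id zookeeper-default-0   # prints 1
# zoo_server_id zookeeper-default-2   # prints 3
```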

```shell
kubectl create -f zookeeper-default.yml
kubectl -n public get statefulsets.apps
```

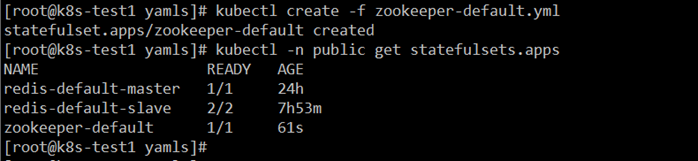
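With replicas: 1 and a single ZOO_SERVERS entry this runs one ZooKeeper node. Growing to an ensemble (an odd size such as 3, so a majority survives one failure) means changing replicas and ZOO_SERVERS together, with one `host:2888:3888` entry per pod in ordinal order, so that the N-th entry lines up with ZOO_SERVER_ID N from the startup script. A sketch that generates the value, assuming the Bitnami image accepts a comma-separated list and numbers servers by their position in it (verify against the documentation for your image tag); `zoo_servers` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a ZOO_SERVERS value for an N-node ensemble.
# Entries go in ordinal order (pod -0 first) and are joined with commas,
# assuming the image maps the i-th entry to server ID i (1-based).
zoo_servers() {
  local replicas="$1"
  local sts="${2:-zookeeper-default}"
  local svc="${3:-zookeeper-default-headless}"
  local ns="${4:-public}"
  local entries=()
  local i
  for ((i = 0; i < replicas; i++)); do
    entries+=("${sts}-${i}.${svc}.${ns}.svc.cluster.local:2888:3888")
  done
  local IFS=,          # "${entries[*]}" joins with the first char of IFS
  echo "${entries[*]}"
}

# zoo_servers 3   # three comma-separated entries, pods -0 through -2
```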

Create the Service

Create the SVC:

```shell
vim zookeeper-default-svc.yml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: zookeeper
    app.kubernetes.io/instance: zookeeper-default
    app.kubernetes.io/managed-by: Tiller
    app.kubernetes.io/name: zookeeper
    helm.sh/chart: zookeeper-5.1.1
  name: zookeeper-default
  namespace: public
spec:
  ports:
    - name: client
      port: 2181
      protocol: TCP
      targetPort: client
    - name: follower
      port: 2888
      protocol: TCP
      targetPort: follower
    - name: election
      port: 3888
      protocol: TCP
      targetPort: election
  selector:
    app.kubernetes.io/component: zookeeper
    app.kubernetes.io/instance: zookeeper-default
    app.kubernetes.io/name: zookeeper
```

```shell
kubectl create -f zookeeper-default-svc.yml
kubectl -n public get svc
```
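Once this Service exists, clients inside the cluster can reach ZooKeeper at `zookeeper-default.public.svc.cluster.local:2181`. Since the StatefulSet whitelists the srvr and mntr four-letter-word commands through ZOO_4LW_COMMANDS_WHITELIST, a quick health check is to send `srvr` and read the `Mode:` line of the reply. A minimal sketch, assuming `nc` is available in whatever pod you run it from; `zk_mode` is a hypothetical helper that only parses the reply:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a ZooKeeper "srvr" reply on stdin and print the
# value of its "Mode:" line (e.g. standalone, leader, follower).
zk_mode() {
  awk -F': ' '/^Mode:/ { print $2 }'
}

# From a pod inside the cluster that has nc installed (assumption):
# echo srvr | nc zookeeper-default.public.svc.cluster.local 2181 | zk_mode
```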

RocketMQ Deployment

RocketMQ is a stateful service, and the project provides an official RocketMQ Operator to simplify deploying it in a k8s cluster. Project page: https://github.com/apache/rocketmq-operator.

Deploy the RocketMQ Operator

Since RocketMQ is deployed through the Operator, deploy the Operator first:

```shell
git clone https://github.com/apache/rocketmq-operator.git
cd rocketmq-operator/
```

Run the script to install the RocketMQ Operator:

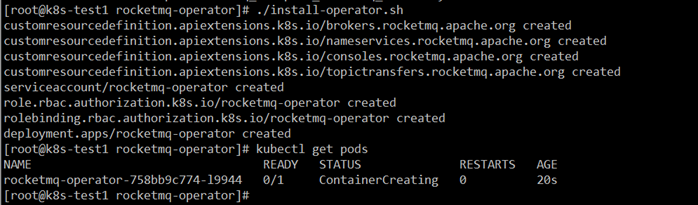

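```shell
./install-operator.sh
kubectl get pods
```

The script automatically creates the resources it needs (Deployment, SVC, ConfigMap, Secret, etc.).

The Operator itself is stateless, so it does not need NAS storage.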

Configure the Cluster

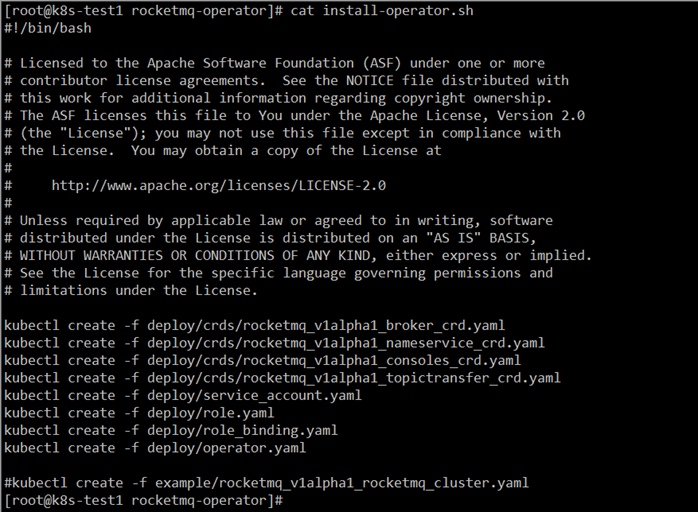

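If you look at the install script used above, you will find a commented-out line at the end; the YAML file it references is the manifest that creates the cluster:

```shell
cat install-operator.sh
```

That file is example/rocketmq_v1alpha1_rocketmq_cluster.yaml.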

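Tip: this file sets storageMode: EmptyDir, which means data is stored in an EmptyDir volume and is wiped when the Pod is deleted, so this mode is only suitable for development and testing. For persistent storage, HostPath or StorageClass is normally used. With HostPath, set hostPath to declare the directory on the host to mount. With StorageClass, configure volumeClaimTemplates to declare the PVC template. See the RocketMQ Operator documentation for details.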

Here we use Alibaba Cloud NAS, so copy the example file and adjust the relevant settings:

```shell
cp -p example/rocketmq_v1alpha1_rocketmq_cluster.yaml rocketmq_v1alpha1_rocketmq_cluster.yaml
vim rocketmq_v1alpha1_rocketmq_cluster.yaml
```

```yaml
...
kind: Broker
...
  resources:
    requests:
      memory: "1024Mi"
      cpu: "250m"
    limits:
      memory: "4096Mi"
      cpu: "500m"
  ...
  # storageMode can be EmptyDir, HostPath, StorageClass
  storageMode: StorageClass
  ...
  volumeClaimTemplates:
    - metadata:
        name: broker-storage
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: alicloud-nas
        resources:
          requests:
            storage: 8Gi
...
kind: NameService
...
  storageMode: StorageClass
  ...
  volumeClaimTemplates:
    - metadata:
        name: namesrv-storage
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: alicloud-nas
        resources:
          requests:
            storage: 1Gi
...
kind: Console
...
```
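As an alternative to editing the storageMode lines in vim, they can be switched with sed, which helps when the same change has to be repeated across environments. A minimal sketch, assuming each storageMode setting sits on its own line as in the example file; it only rewrites the value, so the volumeClaimTemplates blocks above still have to be added by hand. `set_storage_mode` is a hypothetical name, and `-i.bak` leaves a backup copy next to the file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite every "storageMode: <value>" line in a manifest
# to the given mode, keeping indentation. Comment lines ("# storageMode ...")
# are left alone because the pattern requires only whitespace before the key.
set_storage_mode() {
  local file="$1"
  local mode="$2"   # EmptyDir, HostPath or StorageClass
  case "$mode" in
    EmptyDir|HostPath|StorageClass) ;;
    *) echo "unsupported storageMode: $mode" >&2; return 1 ;;
  esac
  # -i.bak works with both GNU and BSD sed; -E enables the \1 group below
  sed -i.bak -E "s/^([[:space:]]*storageMode:)[[:space:]]*[A-Za-z]+/\1 ${mode}/" "$file"
}

# set_storage_mode rocketmq_v1alpha1_rocketmq_cluster.yaml StorageClass
```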

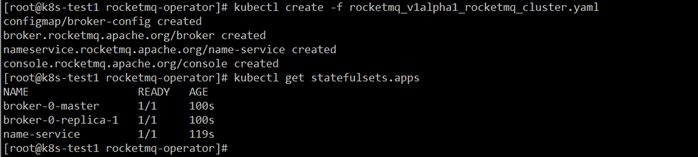

```shell
kubectl create -f rocketmq_v1alpha1_rocketmq_cluster.yaml
kubectl get statefulsets.apps
```

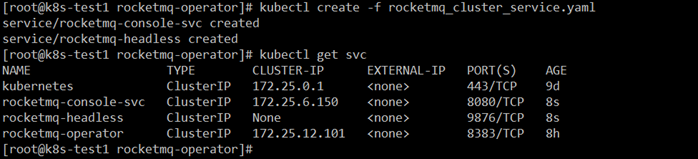

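The bundled example creates its Service as a NodePort. I changed that here to keep it consistent with the development environment, so create the Services as follows: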

```shell
vim rocketmq_cluster_service.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: rocketmq-console-svc
  labels:
    app: rocketmq-console
spec:
  selector:
    app: rocketmq-console
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: rocketmq-headless
  namespace: default
spec:
  clusterIP: None
  selector:
    name_service_cr: name-service
  ports:
    - port: 9876
      targetPort: 9876
      protocol: TCP
```

```shell
kubectl create -f rocketmq_cluster_service.yaml
kubectl get svc
```

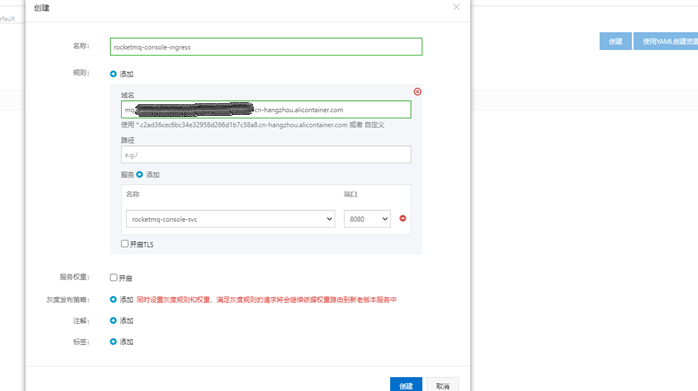
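With rocketmq-headless in place, the current NameServer pod IPs can be listed with `kubectl get endpoints rocketmq-headless -o jsonpath='{.subsets[*].addresses[*].ip}'`. RocketMQ clients take the NameServer list as a semicolon-separated `host:port` string (the namesrvAddr setting), so a small helper can turn those IPs into that form. A sketch; `namesrv_addr` is a hypothetical name, and since pod IPs change when pods are recreated, the headless DNS name `rocketmq-headless.default.svc.cluster.local:9876` may be the more stable choice for application config:

```shell
#!/usr/bin/env bash
# Hypothetical helper: join NameServer IPs into a RocketMQ namesrvAddr string,
# e.g. "10.0.0.1:9876;10.0.0.2:9876". 9876 is the port rocketmq-headless exposes.
namesrv_addr() {
  local port=9876
  local out="" ip
  for ip in "$@"; do
    # ${out:+;} adds a separator only after the first entry
    out+="${out:+;}${ip}:${port}"
  done
  echo "$out"
}

# Needs kubectl access to the cluster:
# namesrv_addr $(kubectl get endpoints rocketmq-headless \
#   -o jsonpath='{.subsets[*].addresses[*].ip}')
```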

Create a route entry:

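Here we use the fifth-level domain name that Alibaba Cloud provides for free, so the route can simply be created in the web console; once it is created, the service can be accessed through that domain: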